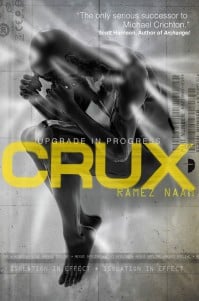

Building a Superintelligence: AI vs Exocortex

On this blog I am interested in all things that can improve human capabilities. That includes training and methods that can make us stronger, faster and smarter right now. But it also includes future possibilities and transhuman technologies that might create legitimate superhumans in the coming decades.

The singularity is a hypothetical future point at which technological progress passes a critical threshold and becomes self-perpetuating. Usually, this is expected to be the result of the advent of a superintelligence: an AI so powerful that it can build virtual worlds, create other AIs, increase its own cognitive abilities and ultimately become the catalyst for unheard-of advances in science and technology.

Cool, right?

But I think the reality will be cooler: I think it will be brought about by super-intelligent humans. Here’s why…

Weak AI and Machine Learning

Part of my argument is that AI is moving too slowly to reach this point. Prominent minds like Elon Musk and Stephen Hawking genuinely believe that AI has the potential to go rogue in our lifetimes. I just don’t see this happening.

AI is everywhere right now. It is in your phone and it is in the computer games you play. But this AI isn’t all that exciting and is generally considered to be ‘weak AI’ or ‘narrow AI’. Sometimes this can also be referred to as ‘reactive AI’. This is the kind of AI that is designed to solve a specific problem or to serve a particular role. Another example is the self-driving car.

Despite being incredibly sophisticated technologies, these devices still rely on pre-programmed responses to situations and thus have no decision-making capabilities. When you fight a bad guy in a computer game, it responds to your movements using a flow chart. If you go left, it parries right. If you back away, it moves forward. It doesn’t do anything in its downtime (unless programmed to do so), it doesn’t have inner thoughts and there is zero threat that it will go rogue. It is entirely predictable.
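To make the “flow chart” idea concrete, here is a minimal Python sketch of that kind of scripted enemy. The move names, the attack range and the `enemy_response` function are all made up for illustration; real games use much bigger rule sets, but the principle is the same: the same input always produces the same output.

```python
def enemy_response(player_move: str, distance: float) -> str:
    """A hypothetical scripted enemy: a fixed flow chart of if/else checks."""
    if player_move == "left":
        return "parry right"      # you go left, it parries right
    if player_move == "back away":
        return "move forward"     # you back away, it closes the gap
    if distance < 1.0:            # made-up attack range, in arbitrary units
        return "attack"
    return "wait"                 # nothing matched: do nothing
```

Call `enemy_response("left", 5.0)` a thousand times and you get `"parry right"` a thousand times. There is no hidden state and nothing for it to think about between turns.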

The most advanced examples of weak AI – which is basically the point we’re at right now – utilize machine learning in order to create far more complex decision trees and to ‘learn’ new behaviors. In reality though, this has as much to do with data and statistics as it does with AI. For example, computer vision gives applications like the new Google Lens the ability to recognize physical objects in the real world. It does this by looking for patterns in gigantic data sets. Google has seen enough variations in color and shape to know what a bottle looks like. It may even be able to point out other bottles or recommend other things you might be interested in (cups?). But that still doesn’t mean it understands the nuances of a bottle, such as the fact that you can fill it with liquid. It can’t choose what it learns next and it is not genuinely interested. This is simple trial and error, simple pattern recognition.
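Here is a toy illustration of that kind of pattern matching: a k-nearest-neighbour classifier in Python. It is a sketch under heavy assumptions (the two “features” and the six training examples are invented, and real computer vision systems like Google Lens use far more sophisticated models), but it shows the key point: the program labels a new object purely by how close its numbers are to examples it has already seen, with no notion of what a bottle is for.

```python
import math
from collections import Counter

# Invented training examples: (height-to-width ratio, neck-to-body width ratio) -> label.
TRAINING_DATA = [
    ((3.0, 0.30), "bottle"),
    ((2.5, 0.25), "bottle"),
    ((3.5, 0.40), "bottle"),
    ((1.0, 1.00), "cup"),
    ((1.2, 1.10), "cup"),
    ((0.9, 0.95), "cup"),
]

def label_object(features, k=3):
    """Label an object by majority vote among the k most similar training examples."""
    # Sort every known example by straight-line distance to the new object.
    nearest = sorted(TRAINING_DATA, key=lambda example: math.dist(features, example[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]
```

For instance, `label_object((2.8, 0.35))` returns `"bottle"` and `label_object((1.1, 1.0))` returns `"cup"`. Nowhere does the program know that either one holds liquid.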

But then again, if you believe in a purely behavioural explanation of human cognition… well then we’re getting there.

And don’t write this off just because it doesn’t sound like a good Hollywood blockbuster: machine learning will help us to cure diseases, to fix the economy and to introduce countless new technologies. It’s just that we are always going to need to be in the driver’s seat.

General AI and Consciousness

The kind of AI that is depicted in science fiction, though, is ‘general AI’. This is AI that aims to mimic human behaviour and ultimately to be able to do everything we can do. This kind of AI should, at the very least, be able to pass the Turing test, meaning that it could hold a conversation with another person without them realizing they’re talking to a computer.

The closest thing we have to this would be something like DeepMind. DeepMind is a company that has developed a neural network that employs general learning algorithms to do things like play computer games and hold stilted conversations. Unlike other attempts such as IBM’s Deep Blue or Watson, DeepMind claims its solution is not in any way pre-programmed. Instead, it learns to think and respond through reinforcement learning, using a ‘convolutional neural network’. It’s amazing technology, but it’s not fooling anyone into thinking it’s human.

Something like this certainly has the potential to make rapid breakthroughs in science and technology. It’s incredibly exciting, too, to think that big companies now have huge AIs like this that could someday help with their R&D (DeepMind is owned by Google). But this is not conscious. It lacks the sentience, self-awareness or sapience to be considered alive. And it lacks the introspection to be able to create other AIs, or to improve its own cognitive abilities.

Something like DeepMind can mimic the behavioural model for learning. Behavioural psychologists believed that all human experience and ability was the result of trial and error, with certain actions and thoughts being ‘reinforced’ by positive stimulus. We start by learning that reaching for food gets us food, and from there we layer on more and more complex behaviours.
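That trial-and-error “reinforcement” can be sketched with an epsilon-greedy learner, one of the simplest reinforcement learning methods. To be clear, this is not DeepMind’s actual system, which combined reinforcement learning with deep neural networks; it’s a toy, and the reward probabilities are hypothetical. The agent starts out knowing nothing, tries actions, and gradually favours whichever one pays off most often.

```python
import random

def train_agent(reward_probs, episodes=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning: actions that get rewarded are 'reinforced'.

    reward_probs[i] is the (hypothetical) chance that action i is rewarded.
    Returns the agent's learned estimate of how rewarding each action is.
    """
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # estimated reward for each action
    counts = [0] * len(reward_probs)    # how many times each action was tried
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))                 # explore at random
        else:
            action = max(range(len(values)), key=values.__getitem__)  # repeat what worked
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Running average: nudge the estimate toward the reward just received.
        values[action] += (reward - values[action]) / counts[action]
    return values
```

With `train_agent([0.1, 0.9, 0.2])`, where action 1 plays the part of “reaching for food”, the agent ends up rating action 1 highest and choosing it almost every time. It learns what works without ever forming a model of why it works.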

But more recent approaches like cognitive psychology look at the all-important ability to create internal models and to run mental simulations: the ability to reason and to choose. This is fundamental to our human experience, and it is what is lacking in something like DeepMind.

Emergent Intelligence

The problem is, we have no idea how to create a truly conscious and self-aware intelligence because we have no idea how that awareness came to be in humans. To me, consciousness is best measured by the ability to make a completely unpredictable choice – how can you program something to do something it isn’t programmed to do? We have no model for our own consciousness, so how can we build it into a machine?

I have a hunch, though, as to how we might go about this. The current ‘cheat’ answer is that consciousness is an emergent property of the human mind. Given the right set of circumstances, given the right tools, consciousness just kind of happens. This is cheating because it doesn’t tell us anything about how it works, but perhaps that’s fine: perhaps that’s all we need to know in order to create our own AI.

I find it kind of weird that our attempts to build an AI are starting with the most complex form of intelligence possible: human intelligence. Why try to create a human intelligence when we could start with something much simpler, like a dog or a bug? These creatures are undoubtedly conscious, but with far less ‘computational power’ required.

And to allow simpler organisms like these to emerge, I suggest that we just need to create the right circumstances for them. In other words, we need to build a simulation and use it to simulate evolution. And the amazing thing is that from there, we could reverse engineer the DNA of life.
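As a rough idea of what “simulating evolution” means in code, here is a toy evolutionary algorithm in Python. Everything about it is an assumption for illustration: genomes are just lists of 0s and 1s, and “fitness” is simply the number of 1s (a classic toy benchmark often called OneMax), standing in for survival. Each generation, the fitter half survives and produces mutated copies of itself.

```python
import random

def fitness(genome):
    """Hypothetical fitness: the number of 1s in the genome, standing in for 'survival'."""
    return sum(genome)

def evolve(genome_length=20, population_size=30, generations=200, mutation_rate=0.02, seed=1):
    """Toy evolution: selection of the fittest plus random mutation."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Selection: the fitter half survives into the next generation.
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]
        # Reproduction: each child copies a random survivor, flipping each bit
        # with probability mutation_rate.
        children = [
            [1 - bit if rng.random() < mutation_rate else bit for bit in rng.choice(survivors)]
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    return max(population, key=fitness)
```

Nobody tells the population what a good genome looks like; selection and mutation alone push the best genome toward all 1s. A simulation aiming at anything like life would need a vastly richer world and a far subtler measure of fitness, which is the hard part.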

In fact, some prominent thinkers like Elon Musk (again) and Nick Bostrom (credited with proposing the theory) believe that we are already living in a simulation. Their reasoning is that, since a single world could create millions of simulations, it is statistically most probable that we too are living inside one.
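The statistical intuition can be written as a simple count. This is a simplified version, not Bostrom’s full argument (which concludes that at least one of three possibilities must be true); it assumes that one “base” world runs N simulated worlds like ours, and that we are equally likely to be in any of them:

```latex
P(\text{simulated}) = \frac{N}{N+1}, \qquad P(\text{base reality}) = \frac{1}{N+1}
```

With N = 1,000,000, the chance of being in the base world is 1/1,000,001, or roughly one in a million.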

Companies like Improbable are working on solutions like SpatialOS, which draws on cloud computing power to create realistic and highly complex simulations, with features like object permanence and infinitely scalable computational models. They’ve already successfully recreated simulations of Cambridge, complete with traffic and pedestrian footfall.

Cambridge is very busy; I’ve been.

Conway’s Game of Life

But you wouldn’t even need anything this complicated. Conway’s Game of Life is a board game, or basic computer simulation, that relies on very simple rules. The game presents a grid of any size with a scattering of ‘cells’ (points on the grid).

Each turn or cycle, any live cell with fewer than two live neighbours (touching cells) is eliminated. Any cell with two or three live neighbours survives. Cells with more than three live neighbours die. And any empty space surrounded by exactly three live cells becomes a new cell.
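Those rules are short enough to fit in a few lines of code. Here is a minimal Python sketch of one generation, assuming live cells are stored as a set of (x, y) coordinates on an unbounded grid (one common approach; a fixed 2D array works too).

```python
from collections import Counter

def step(live_cells):
    """Advance Conway's Game of Life by one generation.

    live_cells is a set of (x, y) coordinates of live cells on an unbounded grid.
    """
    # For every position next to a live cell, count its live neighbours.
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for x, y in live_cells
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth: an empty cell with exactly 3 live neighbours.
    # Survival: a live cell with 2 or 3. Everything else is empty next turn.
    return {
        cell
        for cell, count in neighbour_counts.items()
        if count == 3 or (count == 2 and cell in live_cells)
    }
```

Feeding it a horizontal row of three cells, `{(0, 1), (1, 1), (2, 1)}`, returns the vertical column `{(1, 0), (1, 1), (1, 2)}`, and a second step flips it back. This is the pattern usually called the “blinker”.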

This sounds simple, but when you accelerate the turns over thousands of goes on a large enough grid, you begin to see incredible things happen. Early on, certain patterns appear that manage to survive for multiple turns. Some of these travel across the screen, some shoot projectiles and others even reproduce. These have names like the ‘glider’, the ‘boat’, the ‘tub’ and the ‘toad’.

These are stable configurations, and while everything around them dies out, they persist and proliferate.
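To see how patterns like these can be told apart, here is another small Python sketch. It applies the same rules described above and runs a pattern until the same shape reappears: if it reappears in the same place, it is a still life (period 1) or an oscillator; if it reappears somewhere else, it is a “spaceship” that travels across the grid. The `classify` helper and its 50-generation cut-off are my own illustrative choices.

```python
from collections import Counter

def step(live):
    """One generation of Conway's Game of Life on a set of (x, y) live cells."""
    counts = Counter((x + dx, y + dy) for x, y in live
                     for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0))
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}

def normalise(cells):
    """Return the pattern's shape (shifted to start at 0, 0) and its original offset."""
    min_x = min(x for x, _ in cells)
    min_y = min(y for _, y in cells)
    shape = frozenset((x - min_x, y - min_y) for x, y in cells)
    return shape, (min_x, min_y)

def classify(pattern, max_generations=50):
    """Run a pattern until its shape repeats, then report what kind of pattern it is."""
    start_shape, start_offset = normalise(pattern)
    cells = set(pattern)
    for generation in range(1, max_generations + 1):
        cells = step(cells)
        if not cells:
            return "dies out"
        shape, offset = normalise(cells)
        if shape == start_shape:
            if offset != start_offset:
                return f"spaceship (period {generation})"
            if generation == 1:
                return "still life"
            return f"oscillator (period {generation})"
    return "no repeat found"
```

For example, the glider is `{(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}`, and `classify` reports it as `"spaceship (period 4)"`. The toad, `{(1, 0), (2, 0), (3, 0), (0, 1), (1, 1), (2, 1)}`, comes back as `"oscillator (period 2)"`, while the boat and the tub are both still lifes.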

And using these simple rules, people have been able to create their own starting configurations to create things as complex as calculators!

In other words, this shows that highly complex behaviour and creations can be born from very simple rules – which is kind of how our own world works. Given an infinitely sized grid, things like the calculator would be destined to appear as a statistical certainty with no human input. Not only that, but it’s not a huge stretch to imagine that other creations more complex still could emerge. Perhaps some that were capable of basic evolution and eventual decision making?

Embodied Cognition

My current favourite theory of human cognition is embodied cognition. This theory states that all the inner workings of our minds exist as a direct result of our interactions with the world around us. I’ve written about this before, but in short, we only understand what people say to us because we are able to draw on our own experience in the world. Even abstract ideas like math, and our own imaginations, rely on this initial experience. This is what gives our thoughts context and it is what gives us motivation and purpose.

In short, then, it makes most sense to me that intelligence depends on the ability to interact with the world around it, which is why a disembodied learning algorithm seems to me unlikely to gain consciousness. My prediction is that we’ll first stumble upon a true general AI in a computer game setting, quite by accident. But I don’t think this will happen for a long time.

Exocortices

What I think will happen much sooner is the advent of the exocortex. An exocortex is a hypothetical external device that can augment human thinking: literally an external brain. Just as you can plug an external GPU into your PC to get more graphics power, you could put on a helmet and thus become a genius.

This is a fascinating concept and not one that is explored often. The only example of an exocortex in popular fiction that comes to mind is ‘Cerebro’, the helmet used by Professor Xavier in X-Men to enhance his psychic powers.

In reality, something like this could allow us to store all our memories and never forget anything, to relive past encounters in vivid detail, to store much more information in our working memory, to interface directly with machines and to draw on information from the web as though we already knew it.

Is that incredibly far-fetched? Not hugely. Neuroscientists are already beginning to make breakthroughs in their ability to decode parts of the brain such as the visual cortex, where they can decipher what participants are viewing and believe they could soon recreate the ‘movies in their mind’ piecemeal (reference). Yes, in theory, this technology could be used to create videos of our dreams. For a while now, we’ve been able to let paralyzed patients control mouse cursors with their thoughts, and more. Blind patients are being helped to see in low resolution via stimulation of the visual cortex. We’re slowly amassing enough information to enable a basic understanding of the input and output of the human brain. No doubt, machine learning will only accelerate this process.

It will be a while before anyone is willing to have a chip implanted into their brain to individually stimulate select neurons (though there are already some precedents for this – such as implants to control epilepsy and depression), but we’re moving towards technologies that could do this transcranially using electromagnetism. Not only that, but an exocortex needn’t necessarily feed anything directly into the brain at all. If a headset worn in front of the eyes could detect your thoughts and then show you the information you need with no conscious effort on your part… that would make a huge impact on your ability to work and reason. Or how about if it could hold information you need to remember, again without your having to consciously instruct it to do so?

Studies of monkeys given extra robotic limbs suggest that the brain would be quick to adapt to this technology, thanks to brain plasticity. This could yield entirely new ways of thinking and thus, entirely new thoughts, entirely new breakthroughs.

Basic forms of AI could eventually be incorporated into such systems to create even more advanced superintelligences and this I believe will happen long before we see the emergence of a truly advanced, sentient, general intelligence.

That’s why I believe the first superintelligence will be a human-machine hybrid.

So… yep.